Juxtaposing the words “correctness” and “creativity” tends to provide a Rorschach-like test for revealing minds seeking either dichotomy or synthesis. Naturally, either tendency can be useful according to circumstance.

For the dichotomers, the milieus conjured by this word-pairing defines their perceived differences. “Correctness” invariably connotes truth and reality operating according to independent laws that are ultimately discovered. “Creativity”, on the other hand, becomes perceived by novel departures from reality, an indifference to constraints with all its creations unequivocally invented.

For the synthesizers, activities surrounding correctness and creativity are clearly interrelated. The pursuit of truth is manifestly a creative process while notions of truth clearly infuse the creative act---although here perhaps less obviously. Great art is often said to communicate a higher truth but more recently, Generative AI has injected new notions of “correctness” into many acts of creation. The generative capabilities of GenAI, and in particular the iterative refinements of its hallucinations, suggests a new creative path towards revealing truth in all its guises.

There is an unassuming activity that sits at the intersection of these iconic concepts of correctness and creativity. It is an unheralded, unglamorous activity that nonetheless forms the backbone of correctness throughout computation and, as we will see, at least in another guise, throughout mathematics. This activity can clarify the new computational philosophy adopted by GenAI while also being surprisingly pivotal to AGI pursuits. It is surprisingly under-represented throughout education. The activity is an element of software engineering whose chore-like reputation belies its fundamental importance, a centrality that motivated the creation of CodeAssurance. This humble activity is .... unit testing.

Quantized truth in expert systems

Unit-tests crystallize the philosophical difference between traditional, deterministic computation and the non-deterministic computation now being popularized in GenAI. By favouring a “bottom-up” cumulative approach to computational construction, unit-tests help create software that incrementally and reliably improves. In contrast, GenAI’s “top-down” approach produces software capable of performing great conceptual leaps but with an uncertainty apparently antithetical to unit-test verification. To put it more elementally, without unit-testing’s guardrails, traditional software development becomes impossible whereas with unit-testing’s guardrails, GenAI soaring imagination becomes permanently clipped. Reconciling this philosophical tension is key to successfully assimilating GenAI into modern computing infrastructure.

The significance of unit tests relates to their corpuscular encapsulation of “understanding”--a notorious conception in AI that has been unceremoniously re-configured every time our notion of an expert system is upended. Intuitively, an expert is someone who, when pressed, is capable of giving a detailed, reasoned account of why a decision should be considered expert. Alternatively, a decision based on a person’s vibe would usually be greeted with serious reservations about this person’s expertise. And yet, it is an increasing reliance on a silicon vibe that has characterized a revolution in the creation of expert systems.

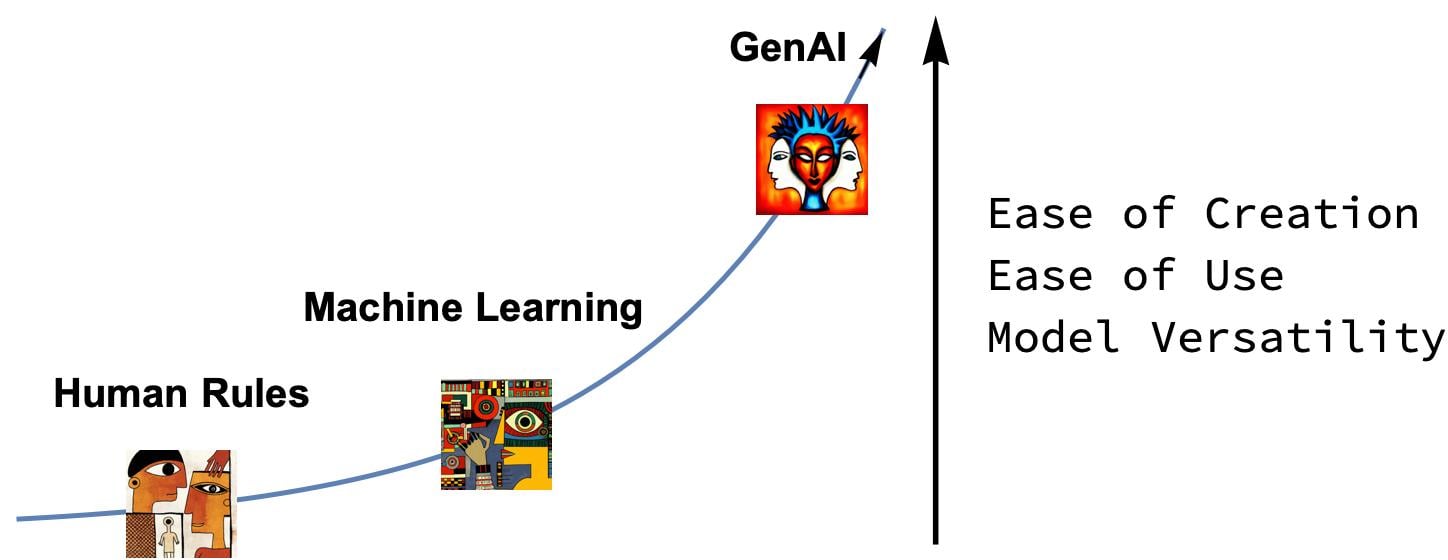

One lens through which the revolutionary impact of GenAI can be understood, is its capacity to dramatically lower the barrier to creating expert systems and in so doing re-define where and when they can be deployed. While there is an extremely high (compute/algorithmic/data) barrier to creating an all-purpose LLM, in a sense a generalized expert system, once this is in place, the subsequent creation of bespoke expert systems becomes transformed. Now, an expert system in any domain can often amount to simply supplying a few additional paragraphs of text. To appreciate what a sea-change this is, it’s worth recalling what it previously took to create tailored expert systems.

The first expert systems involved the “data” of human experience being distilled into a series of if-then statements aimed at capturing the logic typically used by a recognized expert. On top of this infrastructure for translating given situations into formalism runnable by these rules. Machine learning, and in particular, its supervised variety, circumvents core elements of this process, since, rather than relying on humans to articulate hidden rules defining their expertise, a version of such rules could now emerge automatically from analyzing sufficient amounts of tagged data. Machine-learning algorithms provided a generalized way of doing this but a certain amount of technical infrastructure was still required for the data tagging, interpretation and user-interaction.

GenAI-derived models dissolve previous barriers associated with human codification or collecting domain-specific datasets since this has effectively already been performed indirectly by ingesting multiple slices of the internet. Instead, one now simply describes general features of an imagined expert along with any specialized knowledge that he/she/it should also possess. It’s magical, surreal and difficult to understate just what a transformation this represents. Blueprints, it seems, are enough. An era of expert systems beckons all marked by their ease of creation, ease of use and model versatility.

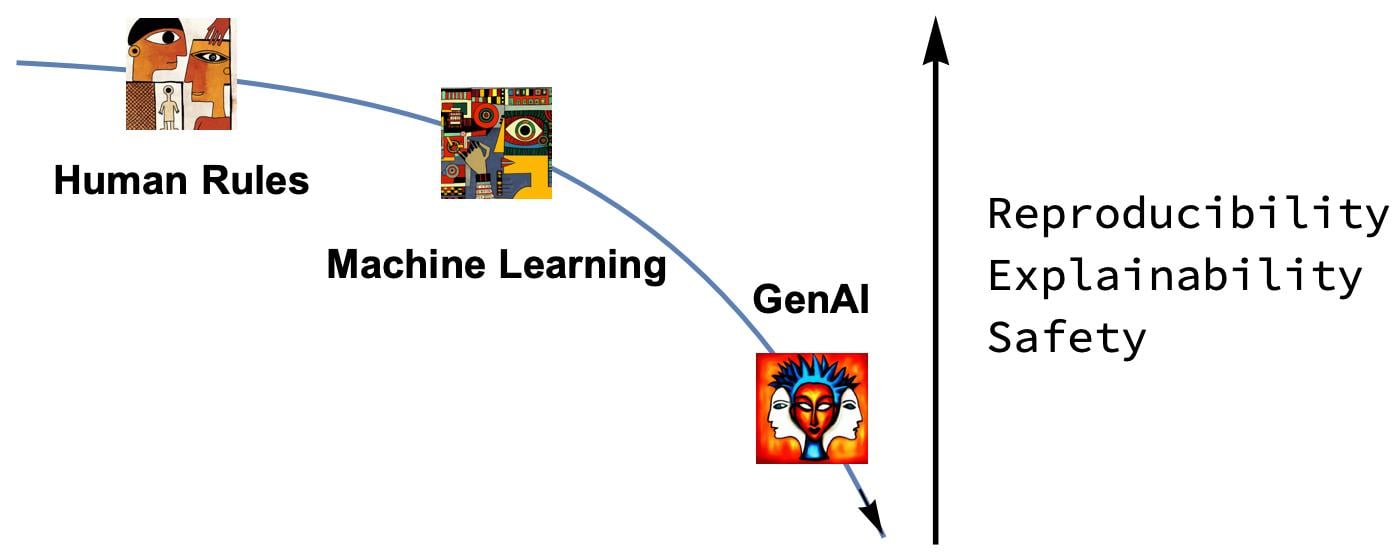

But what has been given up to reach these new heights? Nothing less than the qualities long-considered to form the bedrock of the computer revolution; scientific progress and societal cohesion, namely; Reproducibility, Explainability and Safety.

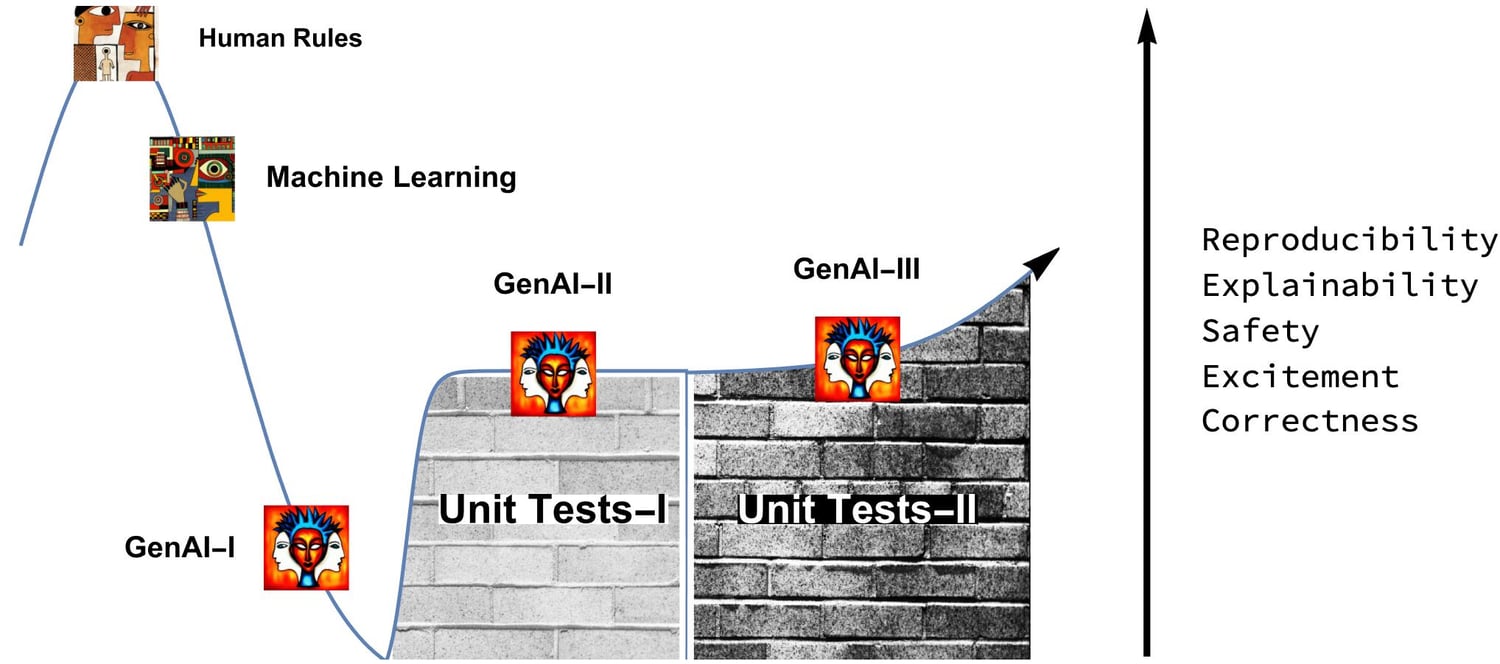

The advance made by GenAI has involved conjuring a new ease of creation, use and a versatility of expert systems while in the process compromising reproducibility, explainability and safety. The profound question now rudely protruding is just how fundamental is this trade-off? Can we keep most of the GenAI's benefits while ameliorating its downsides? Our humble unit-tests provide hope for answering this in the affirmative with its unique capacity to support GenAI on each of these suddenly more tenuous metrics.

Considering unit tests in the broader innovation context, they emerge as a key enabler of GenAI’s mainstream adoption due to their ability to directly address these key issues of reproducibility, explainability and safety. In Gartner’s hype-cycle of innovation adoption, initial exuberance inexorably gives way to dashed hopes as a trough of disillusionment sets in--hints of which are beginning to surface with GenAI primarily due to doubts about the presence of the aforementioned qualities.

Whether any innovation ultimately develops into a long-term technology invariably depends on it subsequently entering a slope of enlightenment as its essence becomes better understood. We are postulating here that for GenAI, this enlightenment critically depends on being able to integrate unit-tests, which, if successfully accomplished, is likely to stimulate a sharper rebound as their transformative power quickly becomes widely recognized.

Finally, Gartner’s hype cycle suggests long-term acceptance once a plateau of productivity is entered as applications become routinely embedded into the technological landscape. Again for GenAI it is natural to envisage these unit-tests stimulating new growth even beyond the standard plateauing but only by harnessing their next-generation incarnation. In particular, new conceptualizations of unit tests capturing stochasticity are needed as well as something not traditionally countenanced in science; a conscious, pervasive infusion of values. More on this shortly.

Reproducibility

The stand-out quality that make unit-tests so critical to mainstreaming GenAI is how they engender reproducibility. It is the quality that allows software to evolve. Software’s “deterministic” cumulativity stems from an inherent pluggability that is the means by which reproducibility propagates. This is because the reproducibility of any piece of software contributes to the reproducibility of any software it plugs into and conversely, its reproducibility depends on the reproducibility of any software plugged into it. In a quite fundamental way then, reproducibility begets reproducibility but break it at any juncture, and the whole paradigm comes crashing down.

What this means is that instilling semblances of reproducibility is absolutely critical for GenAI products to become building-blocks in any future technological tower. Without such reproducibility, GenAI is destined to teeter atop any infrastructure as it vainly attempts to harness a tantalizing linguistic potential.

Of course, implicit in all the perceived advantages of a GenAI-derived expert system is the actual presence of expertise; that, however smoothly it generates, a GenAI-system ultimately exhibits expert-level judgement more often than not. Such judgement doesn’t have to necessarily exceed the accuracy of humans or previous expert systems; such are the implementing advantages GenAI enjoys, merely getting sufficiently close is likely to still yield transformative benefits.

But what is both incredibly exciting and deeply unnerving is the very real prospect of GenAI-derived expertise not only being easier to create and use, but also it seems, being able to wildly exceed the judgement of both humans and all previous automated systems--(and this is before even contemplating even higher judgement levels from hybrid GenAI-human reasoning).

In a broad, abstract sense, GenAI is replicating what human experts do when distilling individual experiences into codified rules but is doing so on an unprecedented scale. Hence, the difference with GenAI’s “experience” is that it constitutes a sizeable fraction of our species’ entire historical output. While such “experience” is more general and therefore inevitably less precise, its sheer volume is appearing increasingly capable of overcoming such imprecision to form deeper “intuitions” hitherto impossible within a single consciousness. Humanity is now scrambling to understand the ramifications, an integral part of which involves coming to terms with a new, almost alien-like notion of correctness that seems utterly impervious to analysis.

While the rules of the first-generation expert systems were followable by design--the rules themselves representing units of human understanding--the more obscure nature of its successor, machine-learned expert systems, created new quandaries about an expert system’s bias, underlying assumptions or predictive prowess. An entire field, Explainable AI has emerged to manage such concerns but GenAI puts such disquiet on steroids.

The black-box nature of GenAI-derived systems represents an even deeper obscurity since the datasets on which its models are trained are typically unknown; the details of its model-building algorithms hidden, the computing resources applied left unspecified while the stochasticity introduced is concealed within coarse descriptors like temperature.

Unit-tests on the other hand, offer a powerful antidote to this enveloping nebulousness. They can engender a new version of reproducibility that otherwise appears beyond GenAI’s stochastic ken. By configuring unit-tests to circumscribe a system’s fine-tuned randomness, either by proscribing allowable ranges or by projecting stochastic output onto precise forms, a level of determinism can be injected into the output of a GenAI system. Developing a suite of unit-tests that a GenAI system is known to consistently pass, can provide a base-line for explainability and safety that is progressively tunable during ongoing interactions. In so doing, the cumulative improvement enjoyed by traditional software development becomes accessible to new software systems incorporating GenAI components.

Unit tests then, are ultimately a technology of cumulativity that, in concert with Turing completeness, has been largely responsible for the computation powering modern civilization. Turing’s conception of a universal machine might have been the original flicker inspiring the notion of general-purpose programs but without the means to knit together arbitrary algorithms, software of any significant sophistication could never have gotten off the ground. Universality might guarantee algorithmic possibility but it doesn’t, in advance, furnish precise knowledge of what algorithms will ultimately be needed. Such knowledge only comes to light gradually through interacting with the environment; universality therefore, on its own is insufficient; universality+cumulativity however, a computing-revolution makes.

The indispensable complementarity of universality+cumulativity is immediately apparent from casting Turing’s 1930’s advance as a linguistic one. The traditional view of Turing’s breakthrough, one that formalizes the notion of an algorithm, can be alternatively appreciated as the invention of a new language-- the first, self-applicable, computing language. By definition, languages arrive pregnant with possibility both in terms of their innate expressiveness and a means for their unlimited growth. Providing the means to computationally grow is what motivates and defines unit-test technology but the nature of such growth embodies a cumulativity beyond the organic proliferation ordinarily seen across natural languages. This is primarily due to the extra power of words doubling as machines. Descriptions built upon descriptions gives you a descriptive richness but one quite unlike the functional richness and resulting power that emerges when machines are built upon machines. There is perhaps only one other field characterized by a similar type of unmistakable cumulativity, mathematics, and the links between its growth, unit-tests and truth is no accident and therefore worth exploring.

The mathematical nexus

Unit tests are, at least in what initially appears to be a loose sense, a generalization of mathematical axioms and theorems. Unit tests define the core facts and interrelationships of software systems in the same way axioms and theorems define the truth and connections in their respective mathematical fields. And while mathematical fields typically involve cleaner foundations, unit-tests are forced to contend with the messier environments in which software applications typically operate. And because of the cleaner, more abstract, starting points of mathematical theorems, they tend to carry a broader applicability more reminiscent of eternal truth.

Equating the growth of mathematical knowledge to the compilation of unit-tests operates at multiple levels also hints at further connection with fundamental computation. For example, it is not just that the dependency-network of lemmas, theorems and corollaries of mathematics appears unit-test-like, but also that this network, once implemented in an actual computational system, must itself ultimately be backed by actual unit-tests. This means that when incorporating a mathematical module, GenAI not only depends on the consistency of mathematics for its own correctness but also on the program-correctness of the software implementing such consistency. But this begs the question; if unit-tests are providing such program-correctness from where does its consistency spring? mathematics? computation? We are immediately confronted with issues surrounding infinite regress, self-referentiality and unclear foundations, ordinarily the province of philosophers, logicians and computer-scientists but now urgently needing practical resolutions to more systematically refine GenAI’s hallucinations.

Despite these philosophical thickets, the road to advancing GenAI has nonetheless frequently been envisioned as one paved with mathematical integration as a bare minimum (without also discounting contributions from other fields). The hope behind the oft-named neurosymbolic hybrids, for example, is that by blending mathematical repositories with GenAI’s creative hallucinations, more human-like, self-correcting reasoning can begin to emerge. Indicators of such emergence include when, for example, GenAI defeats all-comers in human mathematical olympiads or their artificial counterparts while at the frontier, there is fresh funding impetus for revealing this AI-mathematics-reasoning nexus.

The historical example and legacy of regression-testing powering the software revolution, already points to the central role to be played by unit-tests in GenAI’s long-term evolution. What is intriguing however, is that better understanding why unit-tests seem so necessary; by contemplating how and when they can be usurped by deduction offers clues for nudging GenAI’s creative hallucinations towards grounded truth.

To GenAI+

The technological significance of GenAI is undeniable given the considerable fraction of humanity now applying it on a daily basis. Consequently, improving this functionality by improving one’s ability to tap into mathematical truth when refining GenAI’s trademark hallucinations constitutes an important skill that is captured in the following two assertions:

- GenAI+ is realizable by connecting to stores of codified mathematical knowledge.

- Unit tests underpin the connection of this codified mathematical knowledge.

While our explorations in the meaning, significance and implications of GenAI were chosen to be performed in the Wolfram Language primarily because of its inherent literateness, it so happens that this language also houses arguably civilization’s largest base of codified, mathematical knowledge. As a bonus, it also integrates a significant repository of general codified knowledge, namely the one powering Wolfram Alpha. In combination, what this means is that currently there seems no more powerful and natural technology to underpin GenAI's transformation into GenAI+.

One of the most controversial questions regarding GenAI’s application is its level of “understanding” but a more immediately-relevant measure is how such understanding changes both through direct action and through technological iterations. At this point, it is customary to rush towards AGI speculation but it is perhaps worth first considering the incredible challenge this represents and how such a moonshot tends to overlook more proximate gains. It also provides a refuge for mistaken views that any understanding worthy of its name, cannot, even in principle, emerge stochastically. In this belief system, GenAI’s arrival is less a breakthrough and more akin to climbing to a higher branch on a certain cognitive tree, or, if willing to concede somewhat more, akin to adding another few levels to a cognitive sky-scraper. Going all the way to the AGI-moon however, so the argument goes, will be impossible via similar upward forays and instead will require entirely new cognitive rockets.

I find it incontrovertible that GenAI’s models exhibit significant, unprecedented and useful “understanding”. After interacting with GenAI for over a year now, to me its responses display unmistakable signs of rationality and intelligence. That this doesn’t currently rise to the level of human intelligence, spouts hallucinations, or is not animated by human impulses is, in some sense, a category error. It is always tempting to anthropomorphize new capabilities but at this historical juncture, it is perhaps more productive to conceptualize GenAI as catalysing an Amplified Human Intelligence.

Misguided assessments of GenAI’s “intelligence” usually fixate on judging a singular response to artificial questions. A better measure is to instead consider how your own dialogues with GenAI can help you answer deeper more personally meaningful questions. In other words, evaluating to what extent GenAI amplifies your own intelligence better captures its current value. The better educational courses in GenAI reflect this viewpoint as they emphasise such interactivity by covering chain-of-thought or few-shot prompting and other variants.

At a more abstract level, the extra information in chain-of-thought or few-shot prompting, is effectively adding relevant context to the GenAI in the form of reasoning exemplars. This extra context is acting somewhat like collections of amorphous unit tests defining minimal functionality that a desired GenAI should be able to perform. The power of mathematical reasoning however, is that such context, doesn’t have to be repeatedly injected via an external source but instead can be automatically derived or logically deduced. One way of thinking about reasoning therefore, is as a technology for automatically generating context; a new form of contextual economy. And just as succeeding versions of GenAI models advance this economy by requiring less and less context for a given query, they can be said to possess improved understanding. In this way, we can begin to appreciate how a GenAI’s reasoning power becomes turbo-charged once augmented with pervasive context-creating deductions.

Once turbo-charged with a mathematically-based reasoning power, the question then becomes how far can this be pushed; just how much generality can mathematics alone imbue? This answer to this question will likely determine how much of the new “understanding” ushered in by GenAI inevitably plateaus or whether we are in for a steady, inexorable rise in silicon intelligence as larger datasets and computes are thrown at its models. In short, GenAI’s civilizational potential seemingly depends not only on its ability to connect to codified mathematical knowledge but also on its capacity to create it.

To AGI (singularity?)

Although appearing in multiple guises, one widely canvassed pathway towards AGI chains together the first three cascading assertions to which we have added a fourth:

- GenAI can reach AGI when endowed with the capacity to broadly reason.

- A capacity to broadly reason is predicated on the capacity to reason mathematically.

- A mechanized capacity to reason mathematically realizes automatic theorem provers.

- Unit tests underpin the realization of all automatic theorem provers.

All of the above assertions represent philosophical positions that are themselves open to debate but they also represent a certain consensus mainly due to mathematics’ ability to abstract out the reasoning process as well as its manifest success in modelling the natural world. What is made clearer in connecting AGI to ATP so specifically however, is the potential activation of a positive feedback loop. It suggests a new bootstrapping, or meta-awareness that researchers have long identified as a core component of intelligence. It also however, brings into sharp focus the magnitude of the challenge. If AGI’s emergence depends on the creation of an ATP, then this indicates that it is going to be at least as difficult a problem as that with holy-grail-like status for nigh-on a hundred years.

But what if GenAI provides the key piece of technology to finally realize ATP? What if GenAI’s indefatigable linguistic intuition in combination with say a Wolfram plugin (and some further scientific insights) was somehow able to codify on a massive scale, all the inferences (or deductions) traditionally performed throughout mathematics? A functioning, even rudimentary ATP so constructed could then, in turn, be harnessed to define a GenAI++ to further correct its own creative hallucinations as part of generating a better ATP which then, in turn, engenders a better GenAI+++ and so on as a positive feedback loop ratchets up. The hope (fear?) is that such a self-reinforcing progression can but culminate in AI’s holy grail, a spiral of self-improvement ending in the utopia (dystopia?) of science fiction’s singularity.

Whether or not one believes that the end-result of such a self-reinforcing chain-reaction would really ascend to the level of the singularity, it’s hard to avoid the conclusion that such a cycle nonetheless feels more revolutionary than evolutionary. The interplay set in train seems to define an acceleration of a completely different nature than that which arises from “merely” integrating mathematical knowledge to realize GenAI+.

Where’s our universal theorem-prover?

It remains a confounding mystery at to why automatic theorem-provers have yet to usher in a golden mathematical age. The origin story behind Turing Machines is so steeped in number along with the subsequent equivalences via the Church-Turing thesis that ATP’s prompt emergence seems almost pre-ordained. Such an eventuality however, has stubbornly failed to materialize. Some have attributed this ongoing absence as reflecting something deeper perhaps even stemming from a fundamentally epistemological, almost numinous belief in the human spark needed to mathematize--one forever beyond formal computation. On the other hand, there have been significant gains in computer-algebra systems and in narrower domain-specific fields so the puzzle is why these have failed to extend into more general mathematical reasoning. There are three scientific explanations for ATP’s non-appearance that merit serious consideration:

- Gödel incompleteness

- Computational complexity

- Linguistic self-reference

The limitations of the first two, Gödel-incompleteness and computational complexity, have been intensively researched as part of establishing mature fields in logic and computer science but the third, what I’ve coined here, linguistic self-reference, is rarely canvassed, if perceived at all so we will discuss this first. To be clear, the narrative here is not arguing that these three explanations represent fundamental barriers to creating an effective ATP but rather, that they capture critical aspects of its instantiation that need to be addressed and in particular, can (only?) be addressed by the judicious use of unit-tests.

Linguistic self-reference rests on the very simple premise that all languages possess an unavoidable circularity. For example, take a word indexed in any standard dictionary and then select any word from the sentences that define that word’s definition(s). For this selected, definitional word, repeat this process by selecting a word from its definition(s) and continue until the sequence of words so created contains a repeat. In a finite dictionary, such a repeat must always occur which has the implication, rather disturbingly, that every word in a language contains at least some level of self-referentiality and therefore every word is defined, to some degree, in terms of itself. Implicit in such an exercise is that linguistic meaning must be inherently circular. In both mathematics and computation, self-referentiality is often a red-flag as mathematical deductions are studiously designed to never surreptitiously assume a final result while unanticipated recursion, the consequence of such naming circularity in programming is the bane of many a coder.

Such considerations might seem like trivial word games compared with the serious study of foundations but we already have a striking example of how such riddles end up having far-reaching consequences. Take the Cretan seer, Epimenides, and his eight-thousand year old account of a compatriot uttering the famous cry “All Cretans are liars”. The self-referentiality here leads to contradictory conclusions about the truth or otherwise of this simple utterance but its paradoxical essence lies at the heart of the Gödel’s famous incompleteness theorem. In arguably the longest cross-millennial academic collaboration ever undertaken, Gödel’s breakthrough paper quotes Epimenides and his paradox for its underlying justification but there is a disconcertingly small and graspable amount of codification lying between this ancient antinomy and Gödel’s incompleteness that shattered humanity’s intuition about how mathematical truth could be verified.

Linguistic self-reference starts with the same discombobulation born from word-play but operates at a finer level of granularity using words instead of sentences. Soon we will see some immediate computing implications of this self-referentiality (a more formal treatment of managing this “computational incompleteness” in the WL is to appear as well as its manifestations in CodeAssurance). Suffice to say for now, the claim is that while linguistic self-reference is immediately graspable in word riddles, any sufficiently powerful foundation or associated reasoning-engine, contains within its midst, a deep-seated self-referentiality that is inextricably linked to its robustness. More loosely, it seems as if one cannot simultaneously have unadulterated correctness and creativity, one needs to give a little in each direction to ensure progress.

The natural reaction here is to pass these thorny foundational issues off to the cloistered ruminations of mathematicians, logicians and philosophers who surely, one might have hoped, have sorted all this out by now. Alas, despite progress in identifying critical faultlines, nailing what reason is remains a mystery as symbolized by this continued ATP absence. Philosophically framed, the journey to AGI then, may entail the construction of an ATP, a daunting challenge given this itself means nothing less than finally establishing a secure foundation for mathematics, a task akin to deciphering just what mathematics is. We’d better sort this out.

What is mathematics?

There is one intellectual tradition that, perhaps above all others, most consistently lays claim to incontrovertible truth and that is mathematics. While the theories of other scientific fields face the constant threat of repudiation from newly revealed observations, mathematical theorems seem to possess this more impregnable aura. For example, when a mathematical theorem is retracted, it typically follows from detecting a false (human) deduction rather than newly revealed, contradictory evidence. Or at least, if new observations conflict with a previous “proved” result, faulty deduction(s) in this previously assumed “proof” had better be found lest the entire mathematical edifice collapse. In other words, the logical consistency of mathematics is sacrosanct for without this calling card, it fails to be mathematics.

The central question then, is from where does this avowed consistency emanate? Up until now it has overwhelmingly been a sociological phenomenon given that mathematical proofs are ultimately socially-agreed constructs. The mathematical reasoning that allows a given theorem to enter the cannon is first subjected to peer-review using a community-approved standard about what reasoning steps are allowed and what are not. And yet, it has always felt more than this, the reasoning steps in mathematics never feel culturally-specific instead seeming to radiate a certain objectivity redolent of platonic truth. That infinite primes exist without human comprehension needing to perceive them so, hardly seems like platonic overreach. Also platonically modest is how the primes' infinitude exists for a sequence of connected reasons, a sequence that still seems to exist irrespective of human appreciation meaning that the proof of the primes' infinitude, also seems to possess this independent, objective existence.

Indeed, so strong is this feeling of tapping into an independent truth when reasoning mathematically, that it is natural to want to capture these allowable reasoning manoeuvres in any form but particularly into symbolic form. Firstly, from a pedagogical perspective, making explicit the core or kernel of reasoning that drives mathematising is a crucial part of mathematical education solidifying the subject’s sociological origins and cross-generational evolution. Secondly, the spectacular success of algebra, the science-transforming practice of turning linguistic expression into symbols, can’t but invite hopes for a similar jackpot by doing likewise through the development of a “reasoning calculus”. But now we can see the eddies of our linguistic self-referentiality ominously forming--using mathematics to study mathematics--but we barrelled ahead anyway with Boolean Algebra and then logic as the early forerunners of this new reasoning language.

Symbolising actual reasoning steps used in mathematics became even more tempting at the turn of the last century because it was seen as a means to settle a brewing disagreement about just what subject-defining reasoning moves were allowed when practicing mathematics. Surely elevating proof by turning the lens of mathematical correctness on its symbolic expression could not only resolve these present disagreements but do so permanently by securing the subject’s foundations in finally making the subject less beholden to sociology, human passion and ego. If only … (to be continued in Part II)

Comments ()